BY laine Lee

You may not know it, but as a product designer, you have most likely been designing products that use some form of artificial intelligence.

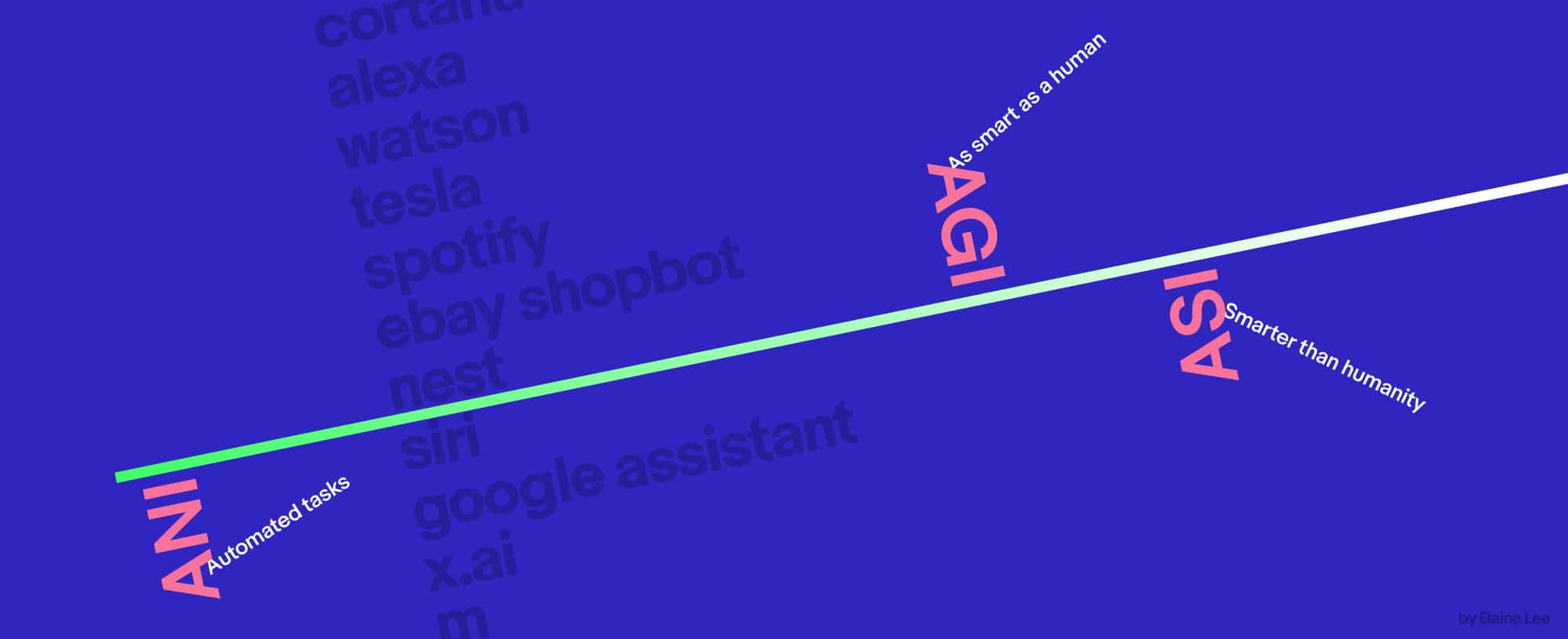

Specifically, these products work within the first stage of AI: artificial narrow intelligence. We designers play a key role in shaping how AI will develop, and we already have the skills to push it forward. My next post will touch on how design makes AI smarter.

But first, it helps to understand where we are in the three stages of AI, so we are better informed about how design can improve it.

Stage 1: Artificial narrow intelligence (ANI)

Products that run on artificial narrow intelligence can perform specific automated tasks extremely well, but they are unable to apply that knowledge to tasks outside of their realm.

Voice assistants like Google Home and Apple’s Siri use ANI to listen and respond based on what they understand. Amazon, Spotify, and Netflix use ANI to recommend things that we did not know we would like. Bots like eBay ShopBot run on ANI to find products for us as we chat with them. X.ai’s Amy and Andrew use ANI to coordinate schedules so all we need to do is show up. Smart home devices and self-driving cars also run on ANI to perform very specific tasks.

ANI eliminates mundane tasks, allowing us more immediate gratification and time to spend on what we actually enjoy doing. It facilitates decision making so we can make an educated choice.

In the case of eBay ShopBot, the chatbot I’ve been designing for at eBay, it does the tedious part of shopping, like research and digging, so users can just do the fun part.

We designers have been creating user flowcharts, if-this-then-that scripts, and templates that dynamic information populates. We design ways for users to indicate explicitly whether they like or dislike something, and ways for the computer to understand implicitly what users are interested in. These are some examples of how we’re currently designing for ANI.
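For readers who like to see these ideas in code, here is a minimal sketch of an if-this-then-that script, a reply template filled with dynamic information, and a score that mixes explicit and implicit signals. Everything in it is an assumption made for illustration: the `find` command, the template wording, the function names, and the signal weights are hypothetical and are not how eBay ShopBot or any real product works.

```python
from string import Template

# Hypothetical reply templates; the $placeholders are filled with dynamic information.
TEMPLATES = {
    "search": Template("Here are $count results for $query."),
    "fallback": Template("Sorry, I did not understand. What are you shopping for?"),
}


def reply(message: str, result_count: int) -> str:
    """If-this-then-that script: pick a template based on the user's message."""
    text = message.strip().lower()
    if text.startswith("find "):  # IF the user asks to find something...
        query = text[len("find "):]
        # ...THEN answer with the search template.
        return TEMPLATES["search"].substitute(count=result_count, query=query)
    return TEMPLATES["fallback"].substitute()  # otherwise, ask again


def interest_scores(events):
    """Combine explicit signals (like, dislike) with implicit ones (view)."""
    weights = {"like": 2, "dislike": -2, "view": 1}  # assumed weights
    scores = {}
    for item, action in events:
        scores[item] = scores.get(item, 0) + weights.get(action, 0)
    return scores
```

Calling `reply("Find red sneakers", 3)` fills the search template, while any message the script does not recognize falls through to the fallback reply. That hard edge is what makes ANI narrow: it handles only the cases we designed for.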

Stage 2: Artificial general intelligence (AGI)

When we have built an AI that matches our level of intelligence, understands the world as we do, and thinks abstractly, it is considered to have artificial general intelligence. AGI is adaptive and applies its knowledge to whatever it wants. It can think and act for itself, solving any problem an adult human can.

Although there aren’t any real-world examples yet, many have written about using AGI to enhance our lives and about its ability to compete with us. We can imagine a smarter Google Home, Alexa, and Siri understanding our intent in our own languages, considering everything that we did not say, and making the best decisions for us.

AGI may manifest itself as something that looks and feels like us. It will outsmart all of us, except perhaps the smartest person in the world, and even then the chance is slim. It can outperform us, reaching objectives faster. But it can also do work that is unsafe for us, or work that we simply don’t want to do.

Efficiency, accuracy, and safety are huge benefits of having AGI. I believe what’s even more valuable is AGI’s ability to keep us from being lonely. How do we build a trusting, harmonious relationship between the personification of AGI and us?

My next post will talk about how we can design experiences that get us closer to AGI.

Stage 3: Artificial superintelligence (ASI)

When AI exceeds the smartest human mind, whether ever so slightly or by exponentially more than all of humanity, it has reached superintelligence. ASI will learn, improve, and build upon itself faster and at a higher capacity than we can imagine. Its knowledge will no longer be based on the human brain. It will reach a level of intelligence that we won’t be able to understand.