Voice prototyping has come to Adobe XD in its latest update, making it the first and only UX/UI platform to seamlessly connect screen and voice prototyping in one app. Speech, of course, is fundamental to the way we communicate; talking to a voice interface is different from how we interact with a screen and a graphical interface.

We’ve already explored some of the best practices to keep in mind when using the new feature, and in this article, leading voice experts offer their advice on improving the UX of voice experiences.

“When a user sets an alarm via an app, it’s pretty straightforward,” Cathy Pearl, head of conversation design outreach at Google and author of Designing Voice User Interfaces, explains. “Open the app, select a date and time, and you’re good to go. But when a user sets an alarm via voice, it gets more complicated.”

Imagine all the ways you can set an alarm for 8 a.m.:

- Umm, can you please set my alarm for tomorrow morning at 8?

- Alarm 8 a.m.

- Wake me up at 8

- I need a timer for 8 a.m. tomorrow

- Set my morning timer for 8 o’clock

“Because of this, prototyping your voice experience is absolutely crucial,” Cathy points out. “You need a way to gather possible responses from real people before you commit to coding.”

“For designers, voice introduces new workflows, opportunities, and obstacles in our design,” agrees Susse Sønderby Jensen, an experience designer at Adobe working on XD’s voice prototyping features.

“From crafting sample dialogue and creating engagement to conquering biases and building empathy, these are new challenges we designers face as we dive into designing for this new interface.”

Read sample dialogues out loud

Even though prototyping can be tough, you can start with very lightweight solutions to design voice-first experiences. Cassidy Williams, who has just left her position as head of developer voice programs at Amazon to join CodePen, focuses on two key areas when she designs a conversation.

“As you write it down, it’s almost guaranteed it will read differently than it sounds out loud,” she explains. “The key is to continuously read it out loud (or play it out loud), and as you change and adjust it, make sure you are listening to it rather than just reading. You’ll hear nuances of words and sentences that just aren’t as natural when spoken out loud, and it’s important to catch those early!”

Susse Sønderby Jensen agrees: “Any good user experience starts with a simple user flow. For voice experiences, this is called a sample dialogue. Write out a simple back-and-forth between the user and the voice interface — this is also referred to as the happy path for your experience.”

Keep in mind, Susse advises, that voice experiences without a screen have no GUI or menu to lean on: everything happens in a sequence (also referred to as a flat UI). You have to guide your users through a flow by giving them clear options and setting expectations up front. Test your voice prototype by talking to it as early as you can, so you can iterate on the responses and requests and improve the user experience.

Provide clear options

Cassidy also recommends giving options for different chunks of the conversation you’re putting together, for every type of response. “We all know users are finicky, especially so in voice!” she laughs. “Add words on occasion to express empathy (in a travel app, for example, you could say, ‘ooh, sounds like a fun trip!’ before moving to the next step in the conversation), and have a response that’s always slightly different for the same feedback. It keeps it natural and engaging.”
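If you later move from a prototype to working code, the “slightly different response” idea can be as simple as a pool of phrasings per conversational step. The sketch below is a minimal illustration, assuming responses are plain strings; `VariedResponder` is a hypothetical helper, not part of Adobe XD or any voice platform. It picks a random variant but never repeats the one it just used.

```python
import random


class VariedResponder:
    """Hypothetical helper: returns a different phrasing each turn, never repeating the last one."""

    def __init__(self, variants, seed=None):
        if len(variants) < 2:
            raise ValueError("need at least two variants to vary the response")
        self.variants = list(variants)
        self.rng = random.Random(seed)  # seedable so prototype runs are reproducible
        self.last = None

    def next(self):
        # Exclude whatever we said last time, then pick randomly from the rest.
        choices = [v for v in self.variants if v != self.last]
        self.last = self.rng.choice(choices)
        return self.last


ack = VariedResponder(["Got it.", "Okay, noted.", "Sounds good."], seed=1)
for _ in range(3):
    print(ack.next(), "Where are you headed?")
```

Excluding only the previous phrasing, rather than cycling in a fixed order, keeps the replies from sounding either repetitive or mechanically rotated.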

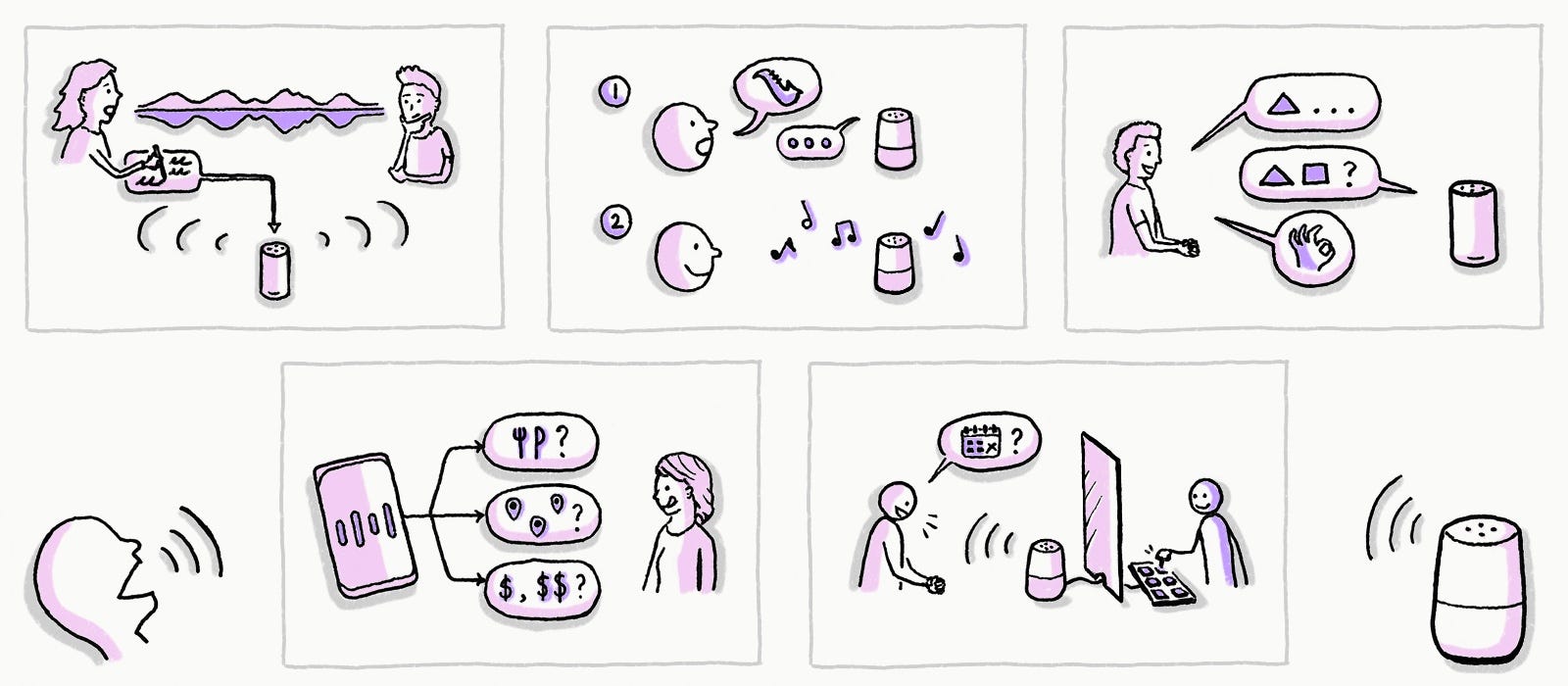

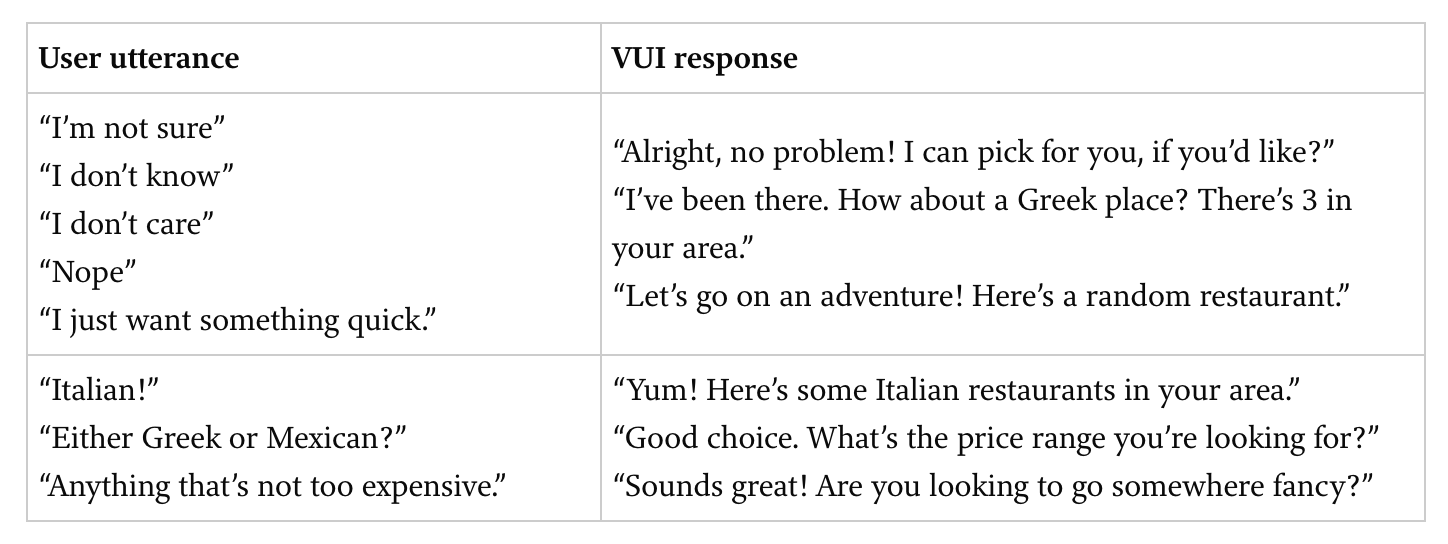

If a user is trying to decide which restaurant to eat at, for instance, after asking which cuisine they prefer, Cassidy suggests mapping out something like this:

In this example, you offer options ranging from picking for the user to pulling more details out of them.

“By changing it up, it flows more naturally, and still provides options for the user to speak more,” Cassidy explains. “You never know what a user might say, so practicing a conversation, mapping it out, and adding options as you explore the concept is the ideal way to get a solid voice design finished.”

Susse Sønderby Jensen also points out that you can avoid errors by providing options and giving users a chance to narrow in on what they’re looking for:

User: Hey, Google, what’s the tallest building in Arlington?

Google: Did you mean Arlington in Virginia, or Arlington in Texas?
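Behind a reply like this sits a simple rule: if a spoken name matches more than one thing, ask instead of guessing. Here is a minimal sketch, assuming a tiny hypothetical gazetteer (`PLACES`) and an arbitrary cap on how many options get read aloud; a real assistant would resolve places with its own location services.

```python
# Hypothetical gazetteer: spoken place name -> known (city, state) matches.
PLACES = {
    "arlington": [("Arlington", "Virginia"), ("Arlington", "Texas")],
    "chicago": [("Chicago", "Illinois")],
}

MAX_SPOKEN_OPTIONS = 3  # arbitrary cap so the spoken list stays short


def join_spoken(options):
    """Join options the way you'd say them: 'A, or B' / 'A, B, or C'."""
    if len(options) == 1:
        return options[0]
    return ", ".join(options[:-1]) + ", or " + options[-1]


def resolve_place(name):
    """Return (place, None) when the name is unambiguous, else (None, follow-up question)."""
    matches = PLACES.get(name.strip().lower(), [])
    if len(matches) == 1:
        city, state = matches[0]
        return f"{city}, {state}", None
    if not matches:
        return None, f"Sorry, I don't know a place called {name}. Which city did you mean?"
    # Several matches: offer a short list instead of guessing.
    spoken = [f"{city} in {state}" for city, state in matches[:MAX_SPOKEN_OPTIONS]]
    return None, f"Did you mean {join_spoken(spoken)}?"
```

For example, `resolve_place("Arlington")` returns no answer and the follow-up question from the dialogue above, while `resolve_place("Chicago")` answers directly.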

Keep it short and grow with your users

Because we use different comprehension skills when we read and when we listen, Susse also advises keeping voice responses short and simple. If you give users options, for example, keep them to a minimum: it’s hard to remember more than three things at a time, and interactions through voice should be time-efficient.

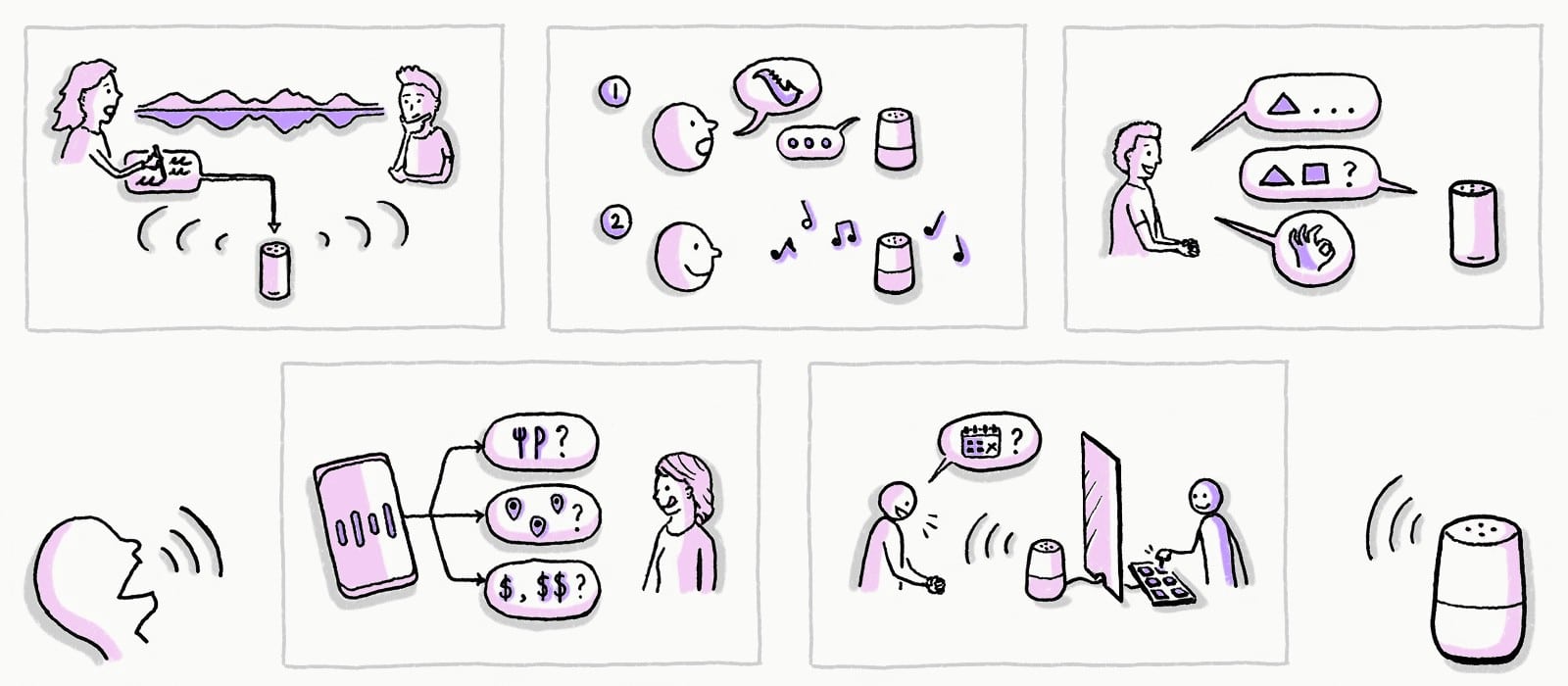

“For novice users, it can also be helpful to repeat back what users are asking for,” Susse suggests. “This helps make users feel understood and more important, and in cases where the system misunderstood an intent, it’s a chance to get the conversation back on track again. And when users interact with a voice assistant on a device, such as a Google Home or an Amazon Echo, lights and chimes are essential in accompanying the conversation and guiding users through their voice experience.”

Meanwhile, if you design for expert users who are already comfortable with the interface, you can peel away a lot of the affordances. Instead of the system saying ‘Now playing Die Young by Sylvan Esso’ every time, for instance, it can simply play the song.
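One way to express the novice/expert difference is as a single decision about whether to speak a confirmation. The sketch below assumes, purely for illustration, that expertise is judged by a hypothetical count of past sessions against an arbitrary threshold; how you actually detect an expert user is a design decision of its own.

```python
from dataclasses import dataclass

EXPERT_SESSION_THRESHOLD = 10  # hypothetical: sessions before a user counts as an expert


@dataclass
class Track:
    title: str
    artist: str


def play_confirmation(track, session_count, threshold=EXPERT_SESSION_THRESHOLD):
    """Return what to say before playing `track`, or None to just play it."""
    if session_count >= threshold:
        # Expert: peel away the confirmation and simply start playback.
        return None
    # Novice: repeat back what was understood, so a misheard request is caught early.
    return f"Now playing {track.title} by {track.artist}"


song = Track("Die Young", "Sylvan Esso")
print(play_confirmation(song, session_count=0))
print(play_confirmation(song, session_count=25))
```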

Move fast and speak things: the Wizard of Oz method

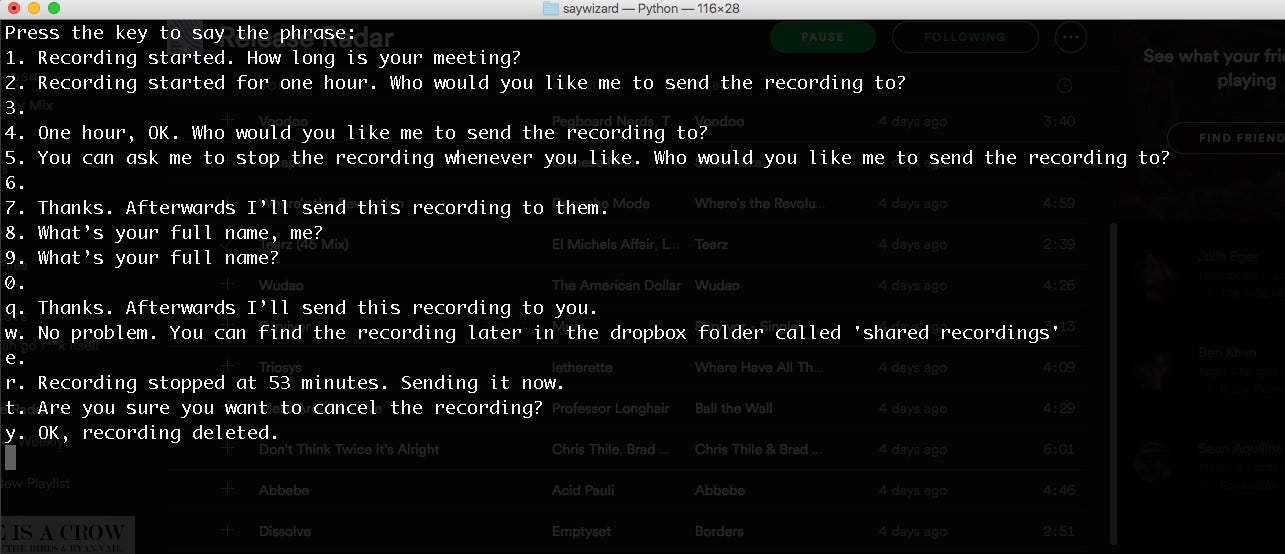

You can also try a ‘Wizard of Oz’ approach, in which the ‘wizard’ (the human) plays text-to-speech responses to what a user says. As voice UI and design strategist Ben Sauer explains, it can actually be absurdly easy and fun to prototype using this method.

“If you can imagine a conversation, type it up and you’re two minutes away from testing it out with a user,” Ben advises. “In a WoZ test, the participants think they’re interacting with a real system, but in fact it’s being controlled by a ‘wizard’: that’s you. It works remarkably well: just by placing an Amazon Echo in the room, while having the audio come from your laptop, most users will remain happily deluded.”

There are many tools to help you run a test like this. Ben has created Say Wizard with Abi Jones at Google — a simple tool that takes a text file, assigns each line of text to a keyboard letter, and then has your Mac read them out loud on each keypress.

“You can run tests to find out what users will say, and see if your design has the correct responses,” Ben explains. “The key is speed of iteration: changing the design is as simple as changing a text file, even between tests.”
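Say Wizard’s description shows how little machinery a Wizard of Oz rig needs. The sketch below is not Say Wizard’s code; it is a rough, hypothetical approximation of the same idea, assuming Python 3 on a Mac, where the built-in `say` command does the speaking (elsewhere it just prints). To stay dependency-free it reads a letter followed by Enter instead of a raw keypress, and `assign_keys`, `speak`, and `run_wizard` are illustrative names.

```python
import shutil
import string
import subprocess
import sys


def assign_keys(lines):
    """Map each non-blank line of the script to a keyboard letter: a, b, c, ..."""
    prompts = [line.strip() for line in lines if line.strip()]
    if len(prompts) > len(string.ascii_lowercase):
        raise ValueError("this sketch supports at most 26 prompts")
    return dict(zip(string.ascii_lowercase, prompts))


def speak(text):
    """Speak text with macOS's `say` command if it exists; otherwise print it."""
    if shutil.which("say"):
        subprocess.run(["say", text], check=False)
    else:
        print(f"[would say] {text}")


def run_wizard(script_path):
    """Wizard loop: type a letter and press Enter to play that line; type 'quit' to stop."""
    with open(script_path, encoding="utf-8") as f:
        keymap = assign_keys(f)
    for key, prompt in keymap.items():
        print(f"{key}: {prompt}")
    while True:
        key = input("> ").strip().lower()
        if key == "quit":
            break
        if key in keymap:
            speak(keymap[key])
        else:
            print("No line assigned to that key.", file=sys.stderr)
```

You would start a session with something like `run_wizard("dialogue.txt")`, where `dialogue.txt` is a hypothetical file with one system response per line. Changing the design between tests is then just a matter of editing that file.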

A Wizard of Oz test is a great activity early in the design process: it will help you quickly identify where the problems in your imagined dialogue are, and how people respond. “While it won’t help you with the biggest challenge for a voice UI designer (gracefully handling errors), it will tell you how your dialogue flows for people,” Ben explains. “And that’s our most important goal: making conversation between humans and machines just… flow.”

For more on this, check out Ben’s article ‘STFU: Test Your Voice App Idea in Less Than An Hour’.

Handling errors

Most users will mispronounce some words, whether English is their first language or not. “I was designing a salad-ordering skill and gave the user the option to select a salad or a warm bowl,” Susse Sønderby Jensen remembers. “When I was running through the interface, however, it kept interpreting me saying ‘warm bowl’ as ‘wormhole’! I got around it by adding ‘wormhole’ as one of the utterances.”
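Voice platforms match speech to intents with their own language-understanding models (Alexa skills, for instance, list sample utterances for each intent), so the sketch below is only a deliberately naive phrase matcher, assuming whole-word matches against hypothetical utterance lists. It mirrors Susse’s fix: the misheard phrase is simply listed as another way of choosing the warm bowl, and anything ambiguous returns `None` so the design can re-prompt.

```python
import re

# Hypothetical utterance lists: each option names the phrases that should select it.
# "wormhole" is deliberate: it's how the recognizer kept hearing "warm bowl".
OPTIONS = {
    "salad": ["salad", "green salad"],
    "warm_bowl": ["warm bowl", "bowl", "wormhole"],
}


def normalize(text):
    """Lowercase, turn punctuation into spaces, and collapse whitespace."""
    return " ".join(re.sub(r"[^a-z]+", " ", text.lower()).split())


def match_option(heard):
    """Return the single option whose utterances appear in what was heard, else None."""
    text = f" {normalize(heard)} "
    matches = {
        option
        for option, utterances in OPTIONS.items()
        if any(f" {u} " in text for u in utterances)
    }
    # Zero or several matches: let the dialogue ask again instead of guessing.
    return matches.pop() if len(matches) == 1 else None
```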

Iterating on the design and testing it with users is therefore crucial, especially if your target users are likely to be bilingual, have a speech impediment, or are children or elderly.

Error cases are important tools for keeping users from getting lost, or for catching them when they do get lost. Setting up help responses is key, Susse advises: as users go through a flow, remind them that they can get help if they need it.

The value of voice prototyping

Google’s Cathy Pearl adds that a prototype can also quickly reveal whether users know if and when they can speak, which becomes increasingly important as voice experiences become available on more and more platforms, such as smart speakers, mobile phones, and smart displays.

“Prototyping is a great way to bring a shared understanding of what the experience will feel like,” Cathy points out. “Sample dialogs in written form are an essential first step, but a prototype with real voice output will really bring it home.”